Aide is now the SOTA on swebench-verified, resolving 62.2% of the issues on the benchmark. We did this by scaling our agent on test time inference and re-learning the bitter lesson.

> The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin.

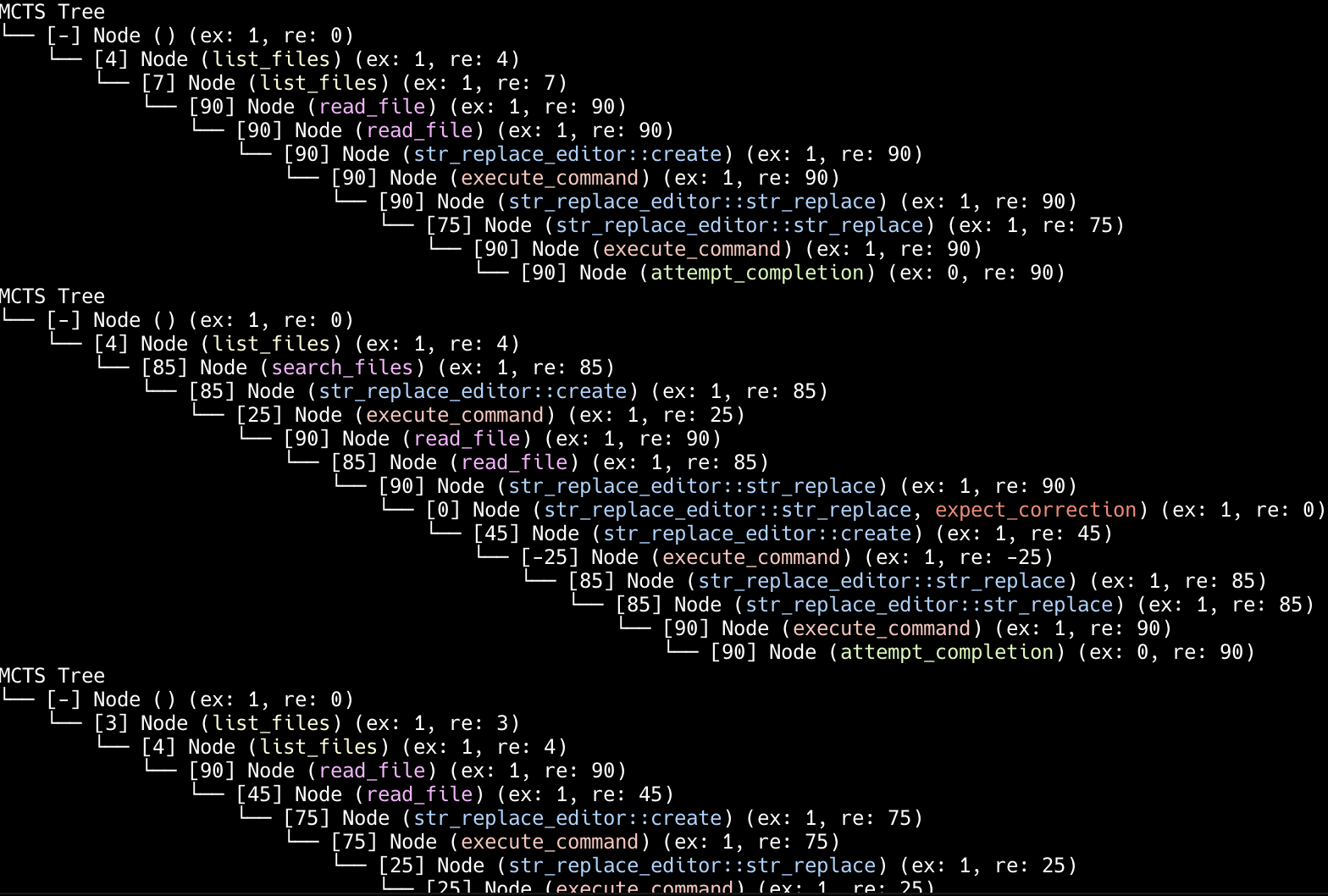

In the midst of this exploration, we also developed an MCTS framework for general software engineering challenges, which we later dropped in favour of test-time scaling. We used Sonnet 3.5 as the only LLM in our testing and were able to improve its performance from 26%!

Agent setup

To kick-start the agentic setup, we selected a few basic tools which our agent had access to. Instead of engineering too much on the tools, we decided on picking the simplest of the tools and used that to power our agent. We ended up giving the agent access to the following tools:

- List Files

- Open File

- Str_replace_editor (Sonnet is pre-trained on this tool)

- Attempt Completion

- RipGrep search

- Terminal Access

The only prior setup we had made at our end was to ensure the prerequisite set of python packages were available as part of the docker container which swebench-verified uses, thus eliminating the need for the agent to spend cycles just to fix environment problems.

We also used Sonnet 3.5 to assign a reward to the agent’s tool-use output. The reward scaling was heavily inspired by the work done by SWE-search team to get the LLM to generate reward for each action taken. The reward rubric is tool-specific, and represents how effective the step was to solving the GitHub issue. For example, if the agent chooses to read a file that turns out to be irrelevant to solving the problem, that step is given a low reward. However, if the next file it chooses to read contains a critical clue, that step would be assigned a higher reward.

On top of this, after each step, the agent generates 1) an observation, and 2) an alternative action it could have taken. This gives the LLM the freedom to explore other promising approaches based on what it has seen, even if it were already deep within a trajectory.

At the end of a trajectory, we calculate the mean reward of the trajectory’s steps, which represents the trajectory’s effectiveness in solving the problem. The score’s calculation is consistent across trajectory length, meaning both long (≥ 20 steps) or short (≤ 10 steps) ones are given equal preference when selecting the trajectory for submission.

One of our most important learnings was that Sonnet 3.5 is a serious workhorse. With our reward scaling in place, it would push through bad steps and still find ways to solve the problems, despite not having test execution capability.

Below is a diagram which shows the number of steps Sonnet 3.5 took to solve a given problem in swebench-verified, with the highest being around 40.

MCTS Tree

Midwit agent x3

We implore you to read the bitter lesson, the TL;DR, for which is: scale beats everything.

Instead of focusing on developing the best framework and being smart, we invested heavily in our infra setup (2 beefy VMs and 2 MacBook Pro M2 Max’s), all churning out tokens within the docker containers for solving swe-bench. The key driving principle, which Google also hinted at with their Gemini 2.0 Flash, AlphaGo, AlphaChess, and AlphaMaths releases, is that with scale, you can absolutely crush any problem.

We noticed similar behaviour when running the agent multiple times in our testing on user queries. Given enough compute, a decent LLM would find the answer without needing interaction with the user. Our goal here is to give as much control over to the Language Model and let it dictate the trajectory instead of the framework itself. Instead of constraining the Language Model, the framework served to scale it.

Our insight from running test time scaling is that the non-deterministic nature of LLMs requires a framework which exploits this nature via scaling and exhausting the solution space the LLM has access to instead of constraining it.

We allowed the framework to run and generate at most 5 trajectories (which was within the token budget we were working with).

Having said that, we had various challenges as a small team in scaling the test time compute.

Challenges as a small team

Scaling in a compute rich environment is more straightforward, not if you are a small startup who is token starved.

We created multiple Anthropic accounts (hush…) and multiple VMs to scale out inference. This allowed us to extract the maximum tokens per second from Anthropic without running into significant errors while running the benchmark, and helped us iterate faster on the benchmark.

PS: We also brought down the network from the office space we were working on while running the experiment, this was not fun for everyone involved but supremely satisfying.

Sadly, even after putting ourselves in a token rich environment, we still got the occasional rate limits which lead to lower scores than what we would have ideally got.

SWE-search and MCTS

The research paper "SWE-Search: Enhancing Software Agents with Monte Carlo Tree Search and Iterative Refinement"[1] illustrates the efficacy of integrating MCTS with a reward function and feedback mechanisms for navigating the solution space. Their study reports a 23% relative performance improvement across five models when applying MCTS-guided strategies, underscoring the significance of structured exploration and strategic orchestration in search processes.

Originally, we used MCTS as a framework for our agent to conduct structured exploration of the solution space for a problem. We quickly realized that while MCTS sounded great and discovered novel solutions, it would take too long to finish the task (often more than 1 hour). It wasn’t clear that MCTS was delivering greater net accuracy per unit time compared to parallel, single-trajectory runs. So, we compared the two approaches, and the latter was consistently stronger.

In light of this discovery, we decided to stop pursuing MCTS as a framework but kept the good parts, the feedback generation and reward scaling, to build our super simple framework whose performance seemed to scale consistently with more compute.

Test Time Compute

With o1 models and many providers doing native inference time scaling, the writing is on the wall that spending more compute on inference leads to better results on any given task. Alpha (Go|Code|Maths|Chess) have already proved that for their respective domain. With our SOTA submission, we want to further highlight this aspect.

We also learned that once the developer environment is set up, we could run as many agents as we wanted (we call it industrialization of intelligence), and brute force your way to the solution.

We at CodeStory are surprised and amazed at this learning. The surprise comes from the fact that for the better half of this year, we figured algorithmic smartness would yield better results. And we were left amazed at the learning that scaling inference could and does yield results which are SOTA.

Parting thoughts

With the bitter lesson ringing true in our minds, now is as good a time to think about how software engineering will change with the coming of AI agents.

I, personally, remember at times at Facebook when a SEV would require the whole team of engineers to take different strands to debug the issue, all of this feels trivial now that we can have 1000X the agents running in the cloud trying out different solutions to solve the problem.

The idea that the dev environment can be duplicated so simply also implies that enterprises with a unified build and test environment like Buck2/Bazel/uv/cargo/yarn/npm would benefit immensely since a developer environment replication can be done in under a second. On the other hand, for the “real” real world, VM snapshotting and just having another checkout locally is an option as well, allowing you to enjoy the industrialization of intelligence and software engineering which is coming in hot.

As a team who are heads down building an editor and deploying agents running locally, we are supremely excited by the learning. The 5-minute range of tasks are real and done everyday by developers, the question of where and how it will be solved is an interesting aspect which we want to further explore.

For now, you can enjoy the same agent in our extension offering and very soon in our editor!

I hope this post and the experiment inspires you to look back at the exponential intelligence growth we have and tackle the domain-specific problems in the dumbest possible way with scale working in your favour.

Citations

[1]: Antonis Antoniades, Örwall, A., Zhang, K., Xie, Y., Goyal, A., & Wang, W. (2024). SWE-Search: Enhancing software agents with Monte Carlo tree search and iterative refinement. arXiv. https://arxiv.org/abs/2410.20285