Aide for enterprises.

Empower your developers with the most secure and best AI-assisted coding experience today.

The IDE is the most used product for developers.

Want to make your developers more productive when they're focused on writing code? Want to ensure AI adoption in your organisation is done securely, without breaking any existing workflows? Want to provide best-in-class AI models for your team?

Aide, by design, runs all the infra bits on the developer's machine. It's just like using any other software. This enables Aide to work with your codebase no matter which hosting service you use: GitHub, Bitbucket, GitLab, Azure DevOps, or Perforce. Aide is a drop-in replacement for the editor you are using, and all your existing workflows work out of the box with Aide.

The only thing Aide needs in order to run is the model. You can run the model on your own infrastructure or use our cloud offering. If you run the model on your own infrastructure, Aide will work with your existing security and compliance policies. CodeStory's cloud offering can host coding models on your cloud of choice. Speak to us about the specific audits and certifications your organisation needs.

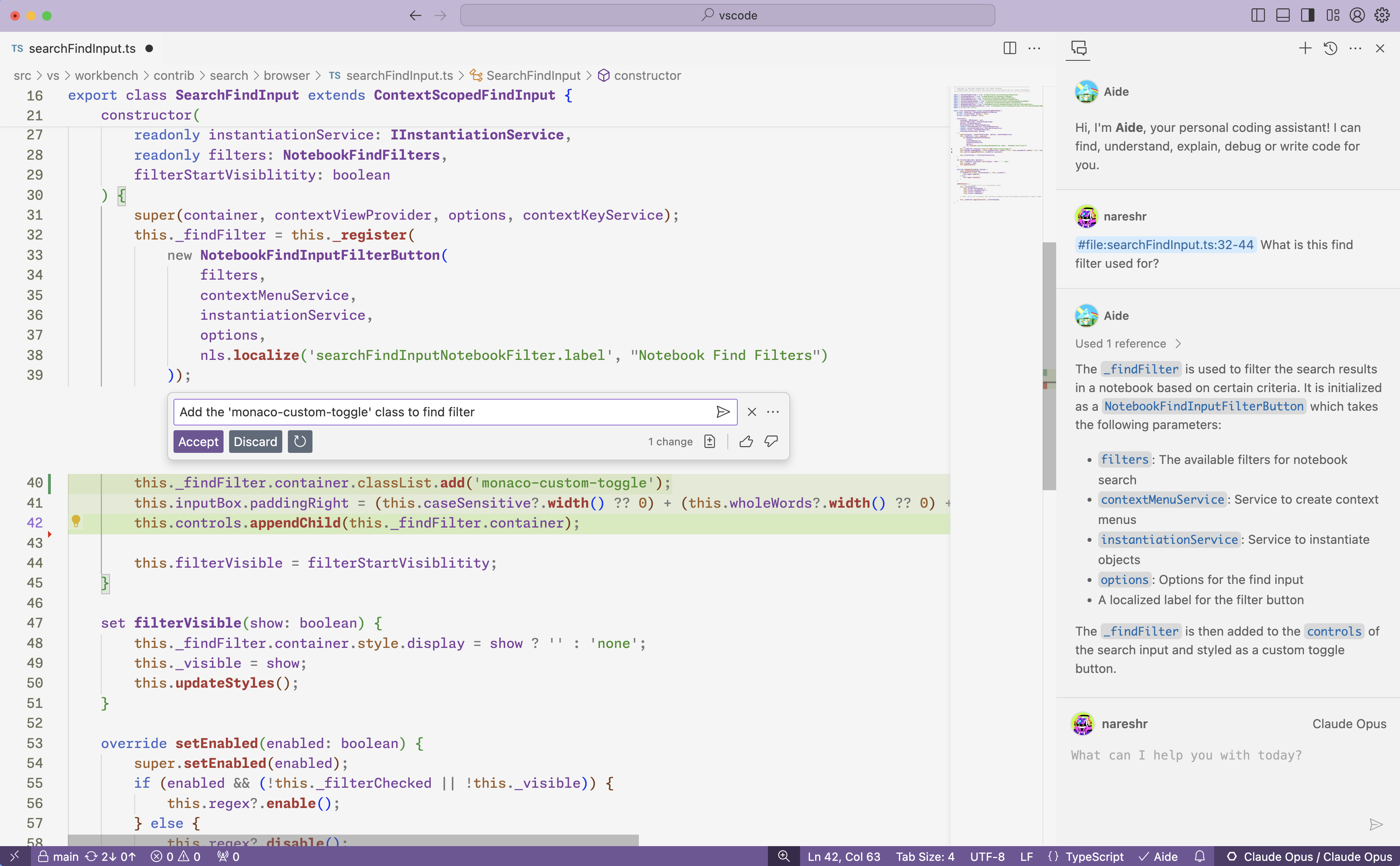

Fine-tuning on your codebase allows for better tab-autocomplete and better context gathering. The fine-tuned model is yours to keep forever and use as you see fit. Fine-tuning also helps the bigger model understand the intricacies of your ever-changing codebase, helping developers stay productive in a fast-moving environment. Our fine-tuned models support custom connectors to your Jira, Confluence, and GitHub Issues, so the model knows not just about the code, but also the context around it.

Enterprises can run Anthropic Claude models via Amazon Bedrock, or OpenAI models via Azure OpenAI. Aide also supports running open-source models like CodeLlama or DeepSeek Coder on your own GPUs, or letting developers run the models on their own laptops via Ollama or LM Studio.